Indirect Supervised Learning of

Strategic Generation Logic

Pablo Ariel Duboue

Submitted in partial fulfillment of the

requirements for the degree

of Doctor of Philosophy

in the Graduate School of Arts and Sciences

COLUMBIA UNIVERSITY

2005

©2005

Pablo Ariel Duboue

All Rights Reserved

Abstract


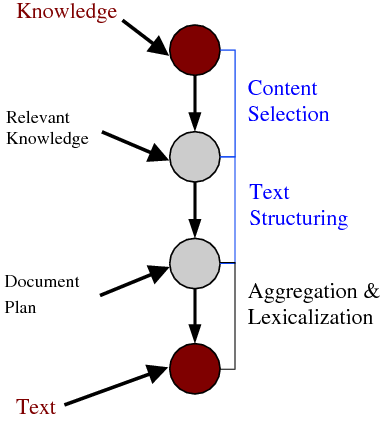

The Strategic Component in a Natural Language Generation (NLG) system is responsible for determining content and structure of the generated output. It takes a knowledge base and communicative goals as input and provides a document plan as output. The Strategic Generation process is normally divided into two subtasks: Content Selection and Document Structuring. An implementation for the Strategic Component uses Content Selection rules to select the relevant knowledge and Document Structuring schemata to guide the construction of the document plan. This implementation is better suited for descriptive texts with a strong topical structure and little intentional content. In such domains, special communicative knowledge is required to structure the text, a type of knowledge referred to as Domain Communicative Knowledge. Therefore, the task of building such rules and schemata is normally recognized as tightly coupled with the semantics and idiosyncrasies of each particular domain. In this thesis, I investigate the automatic acquisition of Content Selection rules and the automatic construction of Document Structuring schemata from an aligned Text-Knowledge corpus. These corpora are collections of human-produced texts together with the knowledge data a generation system is expected to use to construct texts that fulfill the same communicative goals as the human texts. They are increasingly popular in learning for NLG because they are readily available and do not require expensive hand labelling. To facilitate learning I further focus on domains where texts are also abundant in anchors (pieces of information directly copied from the input knowledge base). In two such domains, medical reports and biographical descriptions, I have found aligned Text-Knowledge corpora for my learning task.
While aligned Text-Knowledge corpora are relatively easy to find, they only provide indirect information about the selected or omitted status of each piece of knowledge and their relative placement. My solution to this problem is therefore Indirect Supervised Learning (ISL), an approach shared by other NLG work that learns from Text-Knowledge corpora. ISL has two steps; in the first step, the Text-Knowledge corpus is transformed into a dataset for supervised learning, in the form of matched texts. In the second step, supervised learning machinery acquires the CS rules and schemata from this dataset. My main contribution is to define empirical metrics over rulesets or schemata based on the training material. These metrics enable learning Strategic Generation logic from positive examples only (where each example contains indirect evidence for the task).

Acknowledgments

This thesis has been possible thanks to the continuous support of many, many friends and colleagues. Sometimes just a word of encouragement or a smile in the corridor was all it took to keep the effort flowing through the days.

First and foremost, I would like to thank my family, especially my parents Ariel and Patricia, and my sister Carolina, for their continuous support all through the years. I dedicate this thesis to them. To my extended family, in particular to Jaime, Mema, Victor, Ana, Vicky, Conri, Marina, Gusti, also my deepest thanks.

This thesis has been possible through the continuous guidance and support of my advisor, Prof. Dr. Kathleen R. McKeown. I have been blessed to have Kathy as my advisor. She is not only extremely knowledgeable in the subject matter of this dissertation, but I do believe she is also able to bring out the best in each of her students. When Kathy speaks, she is the voice of the community speaking. Every time I had to change something in a paper following her advice, I found out later at conferences that her advice was well motivated. For all these years, thanks Kathy.

My thesis committee contained an unusual rainbow of skills that evaluated and contributed criticism to this thesis from different, varied perspectives. The committee chair, Prof. Dr. Julia Hirschberg, has asked me some of the toughest research questions I have been asked in my life. Questions that kept me thinking for months. Questions for which I may spend years after having finished this thesis still looking for answers. I am most thankful to Julia for sharing her continuous scientific curiosity with me. My external committee members Prof. Dr. Dan Jurafsky and Dr. Owen Rambow both offered different perspectives on the subjects dealt with in this dissertation. Dan made many comments about the indirect supervised part. His comments helped me shape the problem in the way it is presented today. Owen was the other NLG person on the committee (aside from Kathy). I have admired Owen’s work since my candidacy exam and it has been a pleasure to have him on my committee. Finally, Prof. Dr. Tony Jebara contributed his deep knowledge of Machine Learning in several different ways. I have learned a lot from Tony, both in the technical aspects of Machine Learning and in the inter-personal issues related to communicating with researchers in that community. I just wish I had started interacting with Tony earlier in this dissertation. The machine learning part would definitely be better.

Special thanks go to Smara, Michel and Tita. This thesis wouldn’t be here without their continuous support through the years. Seriously. You know that very well. Thanks, thanks, thanks.

To my friends at the CU NLP Group: Michel Galley, Smaranda Muresan, Min-Yen Kan, Noemie Elhadad, Sasha Blair-Goldensohn, Gabriel Illouz, Simone Teufel, Hong Yu, Nizar Habash, Elena Filatova, Ani Nenkova, Carl Sable, Dave Evans, Barry Schiffman, James Shaw, Shimei Pan, Hongyan Jing, Regina Barzilay, Judith Klavans, Owen Rambow, Vassielios Hatzivassiloglou, David Elson, Brian Whitman, Dragomir Radev, Melissa Holcombe, Greg Whalen, Rebecca Passonneau, Sameer Maskey, Aaron Harnly, John Chen, Peter Davis, Melania Degeratu, Jackson Liscombe and Eric Siegel. To the GENIES Team, especially to Andrey Rzhetsky. To the MAGIC Team, especially to Dr. Desmond Jordan. To the Columbia and Colorado AQUAINT team for creating the environment where half of this thesis flourished, in particular to James Martin, Wayne Ward and Sameer Pradham.

To my friends at the Computer Science Department: Amélie Marian (et Cyril!), Sinem Guven, Guarav Kc, Panos (Panagiotis Ipeirotis), Liz Chen, Kathy Dunn, Alpa Shah, Gautam Nair, Anurag Singla, Tian Tian Zhou, Blaine Bell, Rahul Swaminathan, Vanessa Frias, Maria Papadopouli, Phil Gross, Katherine Heller, Jennifer Estaris, Eugene Agichtein and Gabor Blasko, thanks for their friendship and support. My deepest thanks to Martha Ruth Zadok, Anamika Singh, Twinkle Edwards, Remiko Moss and Patricia Hervey. To my officemates: Liu Yan, Lijun Tang, dajia xiexie. Special thanks to the professors Luis Gravano, David Sturman, Bernie Yee, Bill Noble, and Sal Stolfo. Finally, to my former students Howard Chu, Yolanda Washington, Min San Tan Co, Ido Rosen and Mahshid Ehsani, from whom I most certainly learned much more than they might have learned from me.

To the many friends at Columbia University that keep me away from screens and keyboards and let me regain some of my lost sanity. Special thanks to Fabi (Fabiola Brusciotti), Sato (Satoko Shimazaki), Cari (Carimer Ortiz), Al (Alberto Lopez-Toledo), Steve (Steven Ngalo), Sila Konur, Daniel Villela, Ana Belén Benítez Jiménez, Gerardo Hernández Del Valle, Victoria Dobrinova Tzotzkova, Raúl Rodríguez Esteban. Also to: Tarun Kapoor, Hui Cao, Tammy Hsiang, Maria Rusanda Muresan, Priya Sangameswaran, Shamim Ara Mollah, Ivan Iossifov, Su-Jean Hwang, Enhua Zhang, Kwan Tong, Barnali Choudhury, Manuel Jesus Reyes Gomez, Isabelle Sionniere.

To the CRF staff, especially Daisy, Mark and Dennis, for allowing me to terrorize their computer facilities and for cleaning up after my mess.

I would also like to thank my friends in Argentina for their many years of support. In particular, special thanks to Maximal (Maximiliano Chacón), Alvarus (Álvaro De La Rua), Juancho (Juan Lipari), Juanjo (Juan José Gana), Erne (Ernesto Cerrate) and El Unca (Nicolás Heredia), for putting up with my continuous rantings over non-issues and always receiving me with an open container of unspecified beverages. Also thanks to my friends Diego Pruneda, Martin Lobo, Victoria de Marchi, Paola Allamandri, Andrea Molas, Carlos Abaca, Virginia Depiante, Dolores Table, Alejandra Villalba, Shunko Rojas, Eugenia Rivero, Andriana Quiroga, Clarita Ciuffoli, Maximiliano Oroná, Mariana Cerrate, Gustavo Gutierrez, Facundo Chacón, Paula Asis and Hernán Cohen for being my friends in spite of the distances.

To my friends at Fa.M.A.F.: Gabriel Infante López, Sergio Urinovksy, Nicolás Wolovick, Guillermo Delgadino, Tamara Rezk, Mirta Luján, Pedro Sánchez Terraf and Martin Dominguez, for making me feel I never left. To the professors Javier Blanco, Daniel Fridlender, Francisco Tamarit, Pedro D’Argenio and Oscar Bustos, for their friendship over the years. To my former students: Paula Estrella and Jimena Costa, may the luck of the draw with their next advisors be more favorable to them.

To my friends at UES21: Daniel Blank, Alejandra Jewsbury, Cristina Álamo and to my former students José Norte, Rolando Rizzardi, Federico Gomez, Liliana Novoa and Manuel Diomeda.

To my Argentinian friends in or around NYC: Matt (Matias Cuenca Acuña) and Cecilia Martinez for their continuous support even if I tend to disappear far too often, Alejandro Troccoli, Agus (Agustín Gravano), Quique (Sebastián Enrique), Nicolás Bruno, Guadalupe Cabido, Miguel Mosteiro, Paola Cyment, Marcelo Mydlarz, Tincho (Martín Petrella) and Nacho (Ignacio Miró).

To my Argentinian friends around the world: Patricia Scaraffia and Walter Suarez for being part of my extended family, Horacio Saggion, Fernando Cuchietti and Soledad Búcalo, Paula Sapone, Silvia Seiro, and family, Rainiero Rainoldi, and Alejandra González-Beltrán.

To Tita, Coco and Marina, for being my family away from home.

To my friends from the Association for Computational Linguistics: Anja Belz, Maria Lapata, Nikiforos Karamanis, Roger Evans, Michael White, Gideon Mann, Tomasz Marciniak, Roxana Girju, Maarika Traat, Francesca Carota, Stephen Wan, John Atkinson, Paul Piwek, David Reitter, Nicolas Nicolov, Karin Verspoor, Monica Rogati, Dan Melamed, Clare Voss, Roy Byrd, Srinivas Bangalore, Satoshi Sekine, Aggeliki Dimitromanolaki, Farah Benamara, Mila Ramos-Santacruz, Aline Villavicencio, Sonja Niessen, Rada Mihalcea, Ingrid Zukerman, Mona Diab, Rui Pedro Chaves, Nawal Nassr, Esther Sena, Sara Mendes. Many of you contributed directly or indirectly to the completion of this dissertation. I look forward to further collaboration in the years to come.

To my friends: Annie, Ellen, Xiao Ma, Raúl, Claudio, Sylvia, Marcos, Gimena, Bella, Grace, Maringela, Marylu, Flavia, Aparna, for being my friends in unusual circumstances.

For helping me go through this ordeal at different moments in my life: Lily, Kaming, Carolinita, Niina, Viara, Anushik, Jessica, Alba, Tiantian, Chihiro, Roxy, Keunyoung, Jael, Annie, Sepide, Ale, Patty, Vika, Patty, Luisa, and Cyd. My fondest memories are with each of you.

To my former suite-mates: Lillian, Yen, Majid, Stephen, Onur, Sakura, Miho, Susie, Ansel, Victoria, Arnaud, Gurol, Chihiro, Ramiro, Vikren, Heather, Muhammad, Veronica, Liz, and Melina. You guys taught me how to make life in New York City a thrilling experience. May God brighten your path in life.

To my new colleagues at IBM: Jennifer Chu-Carroll, John Prager, Kryzsztof Czuba, Bill Murdock and Chris Welty, thanks for bearing the deposit frenzy. I look forward to working with you. Also thanks to Glenny and Annie for a daily smile at the workplace (and pocky!).

A mis padres, Ariel y Patricia.

A mi hermana preferida, Carolina.

In a standard generation pipeline, any non-trivial multi-sentential/multi-paragraph generator will require a complex Strategic Component,1 responsible for the distribution of information among the different paragraphs, bulleted lists, and other textual elements. Information-rich inputs require drastic filtering, resulting in only a small amount of the available data being conveyed in the output. Moreover, building a strategic component is normally tightly coupled with the semantics and idiosyncrasies of each particular domain.

Traditionally, Strategic Generation is divided into two subtasks: Content Selection, i.e., choosing the right bits of information to include in the final output, and Document Structuring, i.e., organizing the data in some sensible way. The overall goals of Strategic Generation are to produce text that is at the same time coherent (marked by an orderly, logical, and aesthetically consistent relation of parts; this measure relates to the structuring subtask), concise (expressing much in few words; this measure arguably relates to both subtasks, and it is not always a requirement) and appropriate (meant or adapted for an occasion or use; this measure relates to the selection subtask).

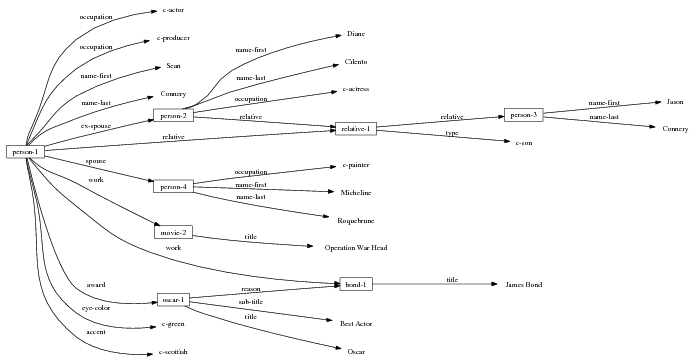

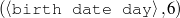

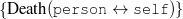

Figure 1.1, an example of the strategic generation task, shows the two main elements of input to a generation system: the input knowledge base (a set of facts, e.g., ex-spouse(person-1, person-2)) and the communicative goal (e.g., “Who is Sean Connery,”2 or “Convince the Hearer that Sean loves Micheline,”3). The two example text excerpts differ at the content level (as opposed to differences at the word level, for example). Both of the aforementioned subtasks of the strategic component can be seen in this example. The first text is not only wrongly structured, but also contains facts that are irrelevant for the given communicative goal (e.g., eye-color) while failing to mention important ones (e.g., occupation). In contrast, the second text presents a much more felicitous selection of content, in addition to linking the facts in a reasonable and natural way.

The example also illustrates some related concepts in the strategic generation literature. For instance, there is a rhetorical relation of Cause between the facts award(person-1,oscar-1) and work(person-1,bond-1). The cue phrase “because” makes this relation explicit. The output of the Content Selection step is the relevant knowledge pool that in the example does not contain eye-color. The document plan is a sequence of messages, where each message is the instantiation of a rhetorical predicate using the input knowledge as arguments. In the example, intro-person(..) is a predicate with arguments first-name, last-name, occupation.4 On the other hand, intro-person(person-1) is a message —verbalized as “Sean Connery is an actor and a producer.”

Communicative Goal:

Who is Sean Connery? Inform(person-1) Knowledge Base: Document Plan (Message Sequence):

[ intro-person(person-1), ] [ ex-spouse(person-1,person-2),

intro-person(person-2), spouse(person-1,person-3),

intro-person(person-3), ] [ child(person-1,person-4),

intro-person(person-4), ] [ movie(bond-1,person-1),

intro-award(oscar-1,person-1) ] Possible Schema:

intro-person(self), |name-first(person-1,‘Sean’) name-last(person-1,‘Connery’)

occupation(person-1,c-actor) occupation(person-1,c-producer)

ex-spouse(person-1,person-2) spouse(person-1,person-4)

name-first(person-2,‘Diane’) name-last(person-2, ‘Cilento’)

occupation(person-2,c-actress) name-first(person-4,‘Micheline’)

name-last(person-4,‘Roquebrune’) relative(person-1,c-son,person-3)

occupation(person-4,c-painter) relative(person-2,c-son,person-3)

name-first(person-3,‘Jason’) name-last(person-3,‘Connery’)

work(person-1,bond-1) title(bond-1,‘James Bond’)

work(person-1,movie-2) title(movie-2,‘operation warhead’)

award(person-1,oscar-1) title(oscar-1,'Oscar')

sub-title(oscar-1,‘Best Actor’) reason(oscar-1,bond-1)

eye-color(person-1,c-green) accent(person-1,c-scottish)

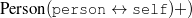

Compare:

(spouse(self,spouse), intro-person(spouse);

{ child(spouse,self,child), intro-person(child) }

| )⋆

(movie(self,movie), intro-movie(movie);

{ award(movie,self,award),

intro-award(award,self) } | )⋆

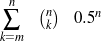

The difficulty of the strategic generation task resides in the fact that, without prior

knowledge, any ordering in a subset of the input is a possible document plan. Since the planner

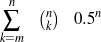

can select any number k of facts between 1 and n (in  ways) and then reorder each such set

in k! ways, there are ∑k=2n

ways) and then reorder each such set

in k! ways, there are ∑k=2n possible plans. This large number of possibilities

makes for a very challenging task, that needs to be approximated with strong domain

heuristics.

possible plans. This large number of possibilities

makes for a very challenging task, that needs to be approximated with strong domain

heuristics.
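To get a feeling for how quickly this count grows, the following minimal sketch adds up the ordered selections of k facts out of n. The function name `count_plans` and the `k_min` parameter are illustrative assumptions, not part of the thesis; `k_min` is exposed so that the starting index of the sum can be varied.

```python
from math import comb, factorial


def count_plans(n: int, k_min: int = 1) -> int:
    """Count ordered selections of k facts out of n, for k = k_min..n.

    Each term is C(n, k) * k!: choose the k facts, then order them.
    """
    return sum(comb(n, k) * factorial(k) for k in range(k_min, n + 1))


if __name__ == "__main__":
    # Growth of the search space for a few (small) knowledge base sizes.
    for n in (3, 5, 10):
        print(n, count_plans(n))
```

Even for ten facts the count is already close to ten million, which is consistent with the need for strong domain heuristics.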

These domain heuristics depend on the type of the target texts. Of particular importance to this dissertation are texts that exhibit a fixed structure that can be explained by tracking the evolution of discourse in a field over time, but cannot be explained by the information the text contains nor by domain knowledge itself. That is the case, for example, in discourse reporting or summarizing factual information. In such cases, the extra knowledge required to structure documents in these domains has been named Domain Communicative Knowledge or DCK (Kittredge et al., 1991). In the medical domain, therefore, we can distinguish domain independent knowledge (e.g., people have diseases that can be treated with surgery) and domain specific knowledge (e.g., a surgery patient needs to be anesthetized) from DCK (e.g., in medical reports about bypass surgeries, the anesthetics information should start the description of the surgery, right after the description of the patient). It is clear that DCK helps reduce the large number of orderings that can be expected a priori to a manageable set of feasible possibilities.

Even though there are general tools and techniques to deal with surface realization (Elhadad and Robin, 1996, Lavoie and Rambow, 1997) and sentence planning (Shaw, 1998, Dalianis, 1999), the inherent dependency on each domain makes the Strategic Generation problem difficult to deal with in a unified framework. My thesis builds on machine learning in an effort to provide such a tool to deal with Strategic Generation in a unified framework; machine learning techniques can bring a general solution to problems that require customization for every particular instantiation.

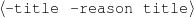

The work described in this thesis investigates the automatic acquisition of Strategic Generation logic,5 in the form of Content Selection rules and Document Structuring schemata, from an aligned Text-Knowledge corpus. A Text-Knowledge corpus is a paired collection of human-written texts and structured information (knowledge), similar to the knowledge base the generator will use to generate a text satisfying the same pragmatic (i.e., communicative) goals being conveyed in the human input text. For example, weather reports for specific dates may be paired with weather prediction data for each of those dates. The construction of such corpora is normal practice during the knowledge acquisition process for the building of NLG systems (Reiter et al., 2000). These corpora are increasingly popular in learning for NLG because they are readily available and do not require expensive hand labelling. However, they only provide indirect information about whether each piece of knowledge should be selected or omitted or the actual document structure. Indirect Supervised Learning (ISL) is my proposed solution to this problem. ISL has two steps; in the first step, the Text-Knowledge corpus is transformed into matched texts, an intermediate structure indicating where in the text each piece of knowledge is appearing (if it appears at all). From the matched texts, a training dataset of selected/omitted classification labels or document plans can be read out, accordingly. In the second step, Content Selection rules or Document Structuring schemata are learned in a supervised way, from the training dataset constructed in the previous step.
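The two ISL steps can be illustrated with a deliberately simplified sketch. It assumes that knowledge is a flat attribute-to-string mapping and that a piece of knowledge counts as selected when its value appears verbatim in the text; the second step uses a per-attribute majority vote as a stand-in learner, which is not the learning machinery of this thesis. All names and the second corpus entry are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Knowledge = Dict[str, str]      # attribute -> verbatim value (simplifying assumption)
Pair = Tuple[str, Knowledge]    # (human-written text, paired knowledge)


def label_pair(text: str, knowledge: Knowledge) -> Dict[str, bool]:
    """Step 1: derive selected/omitted labels by verbatim matching."""
    lowered = text.lower()
    return {attr: value.lower() in lowered for attr, value in knowledge.items()}


def learn_attribute_rules(corpus: List[Pair]) -> Dict[str, bool]:
    """Step 2 (stand-in learner): select an attribute if it was
    selected in more than half of the training pairs."""
    counts = defaultdict(lambda: [0, 0])    # attribute -> [selected, total]
    for text, knowledge in corpus:
        for attr, selected in label_pair(text, knowledge).items():
            counts[attr][0] += int(selected)
            counts[attr][1] += 1
    return {attr: 2 * sel > total for attr, (sel, total) in counts.items()}


# Toy Text-Knowledge corpus; the second entry is entirely made up.
corpus = [
    ("Sean Connery is an actor.",
     {"name-first": "Sean", "name-last": "Connery",
      "occupation": "actor", "eye-color": "green"}),
    ("Jane Doe is a painter.",
     {"name-first": "Jane", "name-last": "Doe",
      "occupation": "painter", "eye-color": "brown"}),
]
rules = learn_attribute_rules(corpus)
print(rules)
```

The point of the sketch is only the data flow: no example carries an explicit selected or omitted label, yet labels can be read out after matching text against knowledge.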

My thesis, therefore, receives as input the natural datasets for its learning task, in the form of text and knowledge. Such input is natural for this task, in the sense that this is the same material humans will use to acquire the Strategic Generation logic themselves. This is the type of information a knowledge engineer may use, together with other knowledge sources, to build a Strategic Generation component for an NLG system.

The rest of this chapter will address the definition of my problem in the next section, present my research hypothesis (Section 1.2), summarize my methods (Section 1.3), enumerate my contributions (Section 1.4), and introduce the domains (medical reports, biographical descriptions) where this research is grounded. An overview of each chapter concludes this introduction.

My problem is the learning of control logic for particular implementations of the first two modules in a generation system. The acquired logic has to be appropriate to solve the Strategic Generation problem in isolation and within existing NLG systems. I describe the integration of my technique in existing NLG systems first, by examining my assumed NLG architecture, and then go deeper into the internals of the logic being sought.

As I am automatically acquiring the knowledge necessary for two internal processes inside an NLG system, the assumed architecture of the system is of great importance. I need to consider an architecture abstract enough to allow for a broad range of applications of the rules and schemata but grounded enough to be executable.

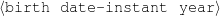

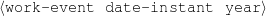

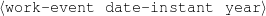

I expect the input data to be provided in a frame-based knowledge representation formalism. Each frame is a table of attribute-value pairs. Each attribute is unique, but it is possible to have lists as values. As such, the values can be either atomic or list-based. The atomic values I use in my work are Numeric (either integer or float); Symbolic (or unquoted string); String (or quoted string); and frame references (a distinguished symbolic value, containing the name of another frame in the frameset).6 The list-based types are lists of atomic values. Each frame has a unique name and a distinguished TYPE feature. This feature has a symbolic filler that can be linked to an ontology, if provided.
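As an illustration of this formalism, the following sketch models frames with Python dataclasses. The class names (`Symbol`, `FrameRef`, `Frame`) and the helper `add_frame` are assumptions made for the example; the attribute values are taken from the Sean Connery example of Figure 1.1.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass(frozen=True)
class Symbol:
    """Symbolic value (unquoted string), e.g. c-actor."""
    name: str


@dataclass(frozen=True)
class FrameRef:
    """Distinguished symbolic value naming another frame in the frameset."""
    frame: str


# Numeric, String, Symbolic and frame-reference values.
Atomic = Union[int, float, str, Symbol, FrameRef]
Value = Union[Atomic, List[Atomic]]     # values are atomic or lists of atomics


@dataclass
class Frame:
    name: str                           # unique within the frameset
    type: Symbol                        # the distinguished TYPE feature
    attributes: Dict[str, Value] = field(default_factory=dict)


def add_frame(frameset: Dict[str, Frame], frame: Frame) -> None:
    """Add a frame, enforcing that frame names are unique."""
    if frame.name in frameset:
        raise ValueError(f"duplicate frame name: {frame.name}")
    frameset[frame.name] = frame


frameset: Dict[str, Frame] = {}
add_frame(frameset, Frame("person-1", Symbol("c-person"), {
    "name-first": "Sean",
    "name-last": "Connery",
    "occupation": [Symbol("c-actor"), Symbol("c-producer")],
    "spouse": FrameRef("person-4"),
}))
add_frame(frameset, Frame("person-4", Symbol("c-person"), {
    "name-first": "Micheline",
    "name-last": "Roquebrune",
}))
```

Attribute uniqueness within a frame follows from using a dictionary, and multiple fillers (such as the two occupations) are expressed as a list value.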

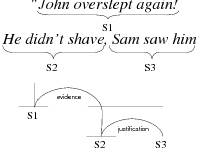

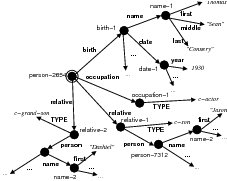

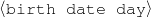

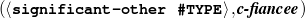

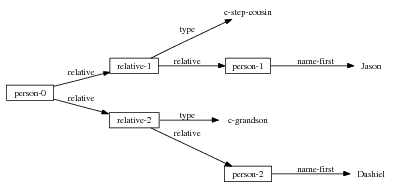

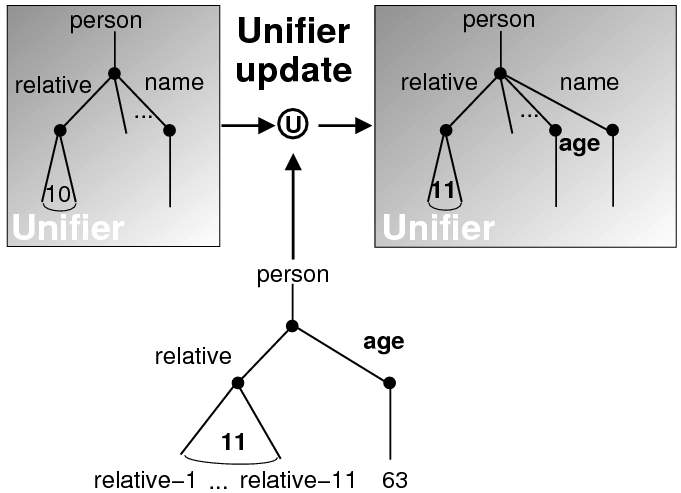

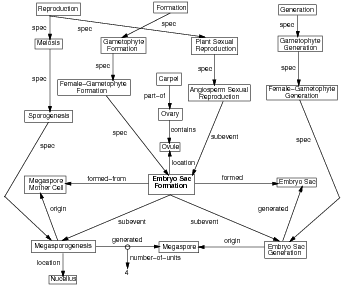

Because my knowledge representation allows for cycles, the actual knowledge representation can be seen as a directed graph: each frame is represented as a node and there are edges labeled with the attribute names joining the different nodes. Atomic values are also represented as special nodes. From this standpoint, the knowledge base of Figure 1.1 is just a factual rendering of the underlying representation. The actual knowledge base would be as shown in Figure 1.2.
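The graph view can be sketched as a list of labeled edges. In this minimal example, frames are plain dictionaries, `Ref` marks a frame reference, and atomic values become special nodes tagged `"atom"`; these encodings, and the back-reference from person-4 to person-1 that creates a cycle, are assumptions made for illustration.

```python
from typing import Dict, List, NamedTuple, Tuple, Union


class Ref(NamedTuple):
    """Reference to another frame, by name."""
    frame: str


Atomic = Union[int, float, str, Ref]
Frames = Dict[str, Dict[str, Union[Atomic, List[Atomic]]]]
Edge = Tuple[str, str, object]      # (source frame, attribute label, target node)


def to_edges(frames: Frames) -> List[Edge]:
    """Turn a frameset into labeled edges of a directed graph."""
    edges: List[Edge] = []
    for name, attrs in frames.items():
        for attr, value in attrs.items():
            values = value if isinstance(value, list) else [value]
            for v in values:
                # Frame references point at frame nodes; everything else
                # becomes a special atomic-value node.
                target = v.frame if isinstance(v, Ref) else ("atom", v)
                edges.append((name, attr, target))
    return edges


frames: Frames = {
    "person-1": {"TYPE": "c-person", "name-first": "Sean",
                 "spouse": Ref("person-4")},
    # The back-reference below is assumed, to show a cycle.
    "person-4": {"TYPE": "c-person", "name-first": "Micheline",
                 "spouse": Ref("person-1")},
}
edges = to_edges(frames)
for edge in edges:
    print(edge)
```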

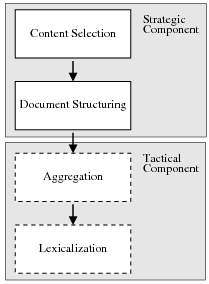

Figure 1.3 shows my assumed two-stage NLG architecture. The Strategic Component is divided into the two modules I am learning. The figure also highlights the interaction between Strategic Generation and both aggregation and lexicalization in the Tactical Component, as they pose challenges to my learning system. The aggregation module will take a list of aggregation chunks (each containing a number of messages) and produce as output a list of sentences (each sentence containing one or more messages). The lexicalization module changes messages into words; it encompasses referring expressions, lexicalization and surface realization. Therefore, aggregation and lexicalization will re-order messages locally; when observing text as evidence of document plans the original ordering will be distorted. I will now discuss Content Selection and Document Structuring as they are the focus of this dissertation.

The Content Selection module takes the full knowledge base as input and produces a projection (a subset) as output. This module should also consider the communicative goal when building the output subset. The output of the Content Selection module has been termed the relevant knowledge pool (McKeown, 1985), viewpoints (Acker and Porter, 1994) or generic structure potential (Bateman and Teich, 1995).

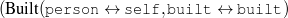

A possible implementation of a Content Selection module uses rules to decide whether or not to include a piece of information. This decision is based solely on the semantics of the data (e.g., the relation of the information to other data in the input). These rules take as input a node in the knowledge representation graph and execute a predicate on it ($f : \text{node} \to \{\text{true}, \text{false}\}$).

The decision of whether to include a given piece of data is made solely on the basis of the given data (no text is available during generation, as the generation process is creating the output text).7 The current node and all surrounding information are useful to decide whether or not to include a piece of data. For example, to decide whether or not to include the name of a movie, whether the movie was the reason behind an award (and the award itself) may be of use. Such a situation can be addressed with the rules defined below.

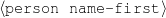

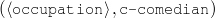

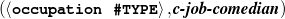

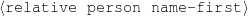

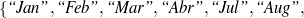

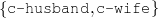

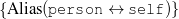

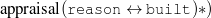

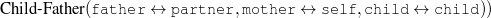

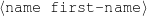

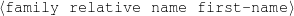

While I experimented with a number of rule languages, I will describe here the tri-partite rule language, a solution that exhibits the right degree of simplicity and expressive power to capture my training material in the biographical profiles domain. Other domains may require a more complex rule language but, in practice, the tri-partite rules are quite expressive and very amenable to learning.

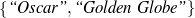

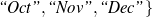

Tri-partite rules select a given node given constraints on the node itself and a second

node, at the end of a path rooted on the current node. For the constraints on nodes, I have used

two particular type of constraints: whether or not the value of the node belongs to a particular

set (e.g., value ∈ ) or a special (True) constraint that

always selects the item for inclusion (the absence of any rule that selects a node

is equivalent to a False rule). Again, more complex constraints are possible, but

these types of constraints are easily learnable. Some example rules are detailed in

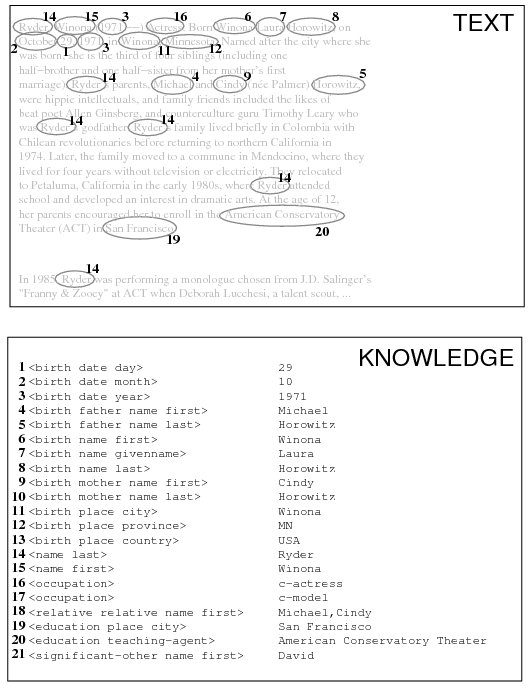

Figure 1.4.

) or a special (True) constraint that

always selects the item for inclusion (the absence of any rule that selects a node

is equivalent to a False rule). Again, more complex constraints are possible, but

these types of constraints are easily learnable. Some example rules are detailed in

Figure 1.4.

(-, -, -). ;Select-All

(-, -, -). ;Select-All

Always say the first name of the person being described.

: (false, -, -). ;Select-None

: (false, -, -). ;Select-None

Never say the eye color of the person being described.

: (value ∈

: (value ∈ , -,-).

, -,-).

Only mention the name of an award if it is whether a Golden Globe or an Oscar.

: (-,

: (-, ,value ∈

,value ∈ ).

).

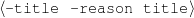

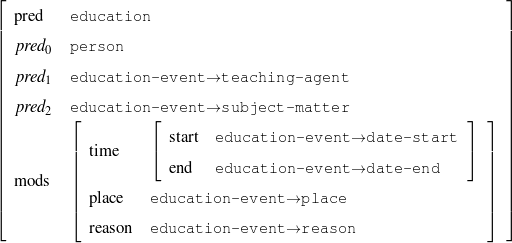

Only mention the title of a movie if the movie received an Oscar or a Golden Globe.
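The behavior of tri-partite rules can be sketched as follows. This is an illustrative reading rather than the thesis implementation: a rule is a triple (node constraint, path, end constraint), `None` stands for "-", `False` for the Select-None constraint, and a frozenset for a "value ∈ set" constraint. The toy graph, the hypothetical `received` attribute, the concrete sets, and the choice to evaluate paths from the frame that holds the value are all assumptions.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple, Union

Constraint = Union[None, bool, FrozenSet[str]]
Rule = Tuple[Constraint, Optional[List[str]], Constraint]

# Toy graph: frame name -> attribute -> atomic value or name of another frame.
# The "received" attribute is hypothetical.
GRAPH: Dict[str, Dict[str, str]] = {
    "bond-1": {"title": "James Bond", "received": "oscar-1"},
    "movie-2": {"title": "operation warhead"},
    "oscar-1": {"title": "Oscar"},
}


def holds(constraint: Constraint, value: str) -> bool:
    """Evaluate one constraint against a value."""
    if constraint is None or constraint is True:
        return True
    if constraint is False:
        return False
    return value in constraint


def follow(frame: str, path: List[str]) -> Optional[str]:
    """Return the value at the end of `path` starting at `frame`, if any."""
    current = frame
    for label in path:
        attrs = GRAPH.get(current)
        if attrs is None or label not in attrs:
            return None
        current = attrs[label]
    return current


def selects(rule: Rule, frame: str, attribute: str) -> bool:
    """Decide whether `rule` selects the value of `attribute` in `frame`."""
    node_c, path, end_c = rule
    value = GRAPH[frame].get(attribute)
    if value is None or not holds(node_c, value):
        return False
    if path is None:
        return True
    end = follow(frame, path)
    return end is not None and holds(end_c, end)


AWARDS = frozenset({"Oscar", "Golden Globe"})
select_all: Rule = (None, None, None)
select_none: Rule = (False, None, None)
award_name: Rule = (AWARDS, None, None)
movie_title: Rule = (None, ["received", "title"], AWARDS)

print(selects(movie_title, "bond-1", "title"))
print(selects(movie_title, "movie-2", "title"))
```

Under these assumptions, the title of `bond-1` is selected because the path reaches an award in the set, while the title of `movie-2` is not, since the path cannot be followed.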

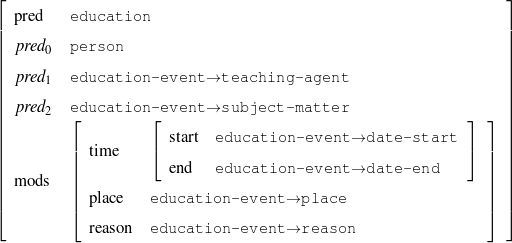

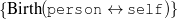

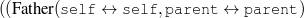

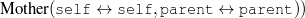

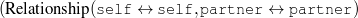

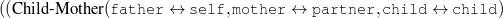

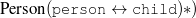

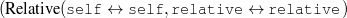

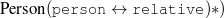

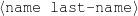

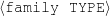

A schema is a particular solution to the Document Structuring task, a task that takes as input a subset of the knowledge base (the relevant knowledge pool) and returns a sequence of messages (a document plan). These messages are produced by communicative predicates (Figure 1.5) composed of three items: variables, properties and output. Each variable has a type, which further constrains the possible values it can take. The actual number and nature of these predicates vary from domain to domain. A predicate can be considered as a function that takes a number of defined (and maybe undefined) variables and searches the knowledge representation for values of the undefined variables that satisfy the constraints inside the predicate. If none are found (or if the provided variables do not satisfy the constraints), the predicate cannot be instantiated. For each set of values8 that satisfy its constraints, the predicate produces a message (Figure 1.6), a data structure assembled using the variable assignment found during the search. The messages are the nexus between the schema and the rest of the NLG system. A predicate, therefore, can be thought of in this context as a blueprint for making messages.

| Variable | Type |
| --- | --- |
| person | c-person |
| education-event | c-education-event |
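The view of a predicate as a blueprint for messages can be sketched as below. The `Predicate` class, its field names, the dictionary-based knowledge base and the `c-movie` type are assumptions for illustration; only the three-part structure (variables, properties, output) and the search over undefined variables come from the description above.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List

# Toy knowledge base: frame name -> attributes (TYPE included).
KB: Dict[str, Dict[str, str]] = {
    "person-1": {"TYPE": "c-person", "name-first": "Sean", "spouse": "person-4"},
    "person-4": {"TYPE": "c-person", "name-first": "Micheline"},
    "bond-1": {"TYPE": "c-movie", "title": "James Bond"},
}

Assignment = Dict[str, str]     # variable name -> frame name


@dataclass
class Predicate:
    name: str
    variables: Dict[str, str]                   # variable -> required TYPE
    properties: Callable[[Assignment], bool]    # constraints on an assignment
    output: Callable[[Assignment], dict]        # assembles the message

    def instantiate(self, bound: Assignment) -> List[dict]:
        """Search the KB for values of the unbound variables and
        return one message per satisfying assignment."""
        free = [v for v in self.variables if v not in bound]
        candidates = [
            [f for f, attrs in KB.items() if attrs["TYPE"] == self.variables[v]]
            for v in free
        ]
        messages = []
        for combo in product(*candidates):
            assignment = {**bound, **dict(zip(free, combo))}
            well_typed = all(
                KB.get(assignment[v], {}).get("TYPE") == t
                for v, t in self.variables.items()
            )
            if well_typed and self.properties(assignment):
                messages.append(self.output(assignment))
        return messages


spouse = Predicate(
    name="spouse",
    variables={"self": "c-person", "spouse": "c-person"},
    properties=lambda a: KB[a["self"]].get("spouse") == a["spouse"],
    output=lambda a: {"pred": "spouse", "self": a["self"], "spouse": a["spouse"]},
)
print(spouse.instantiate({"self": "person-1"}))
```

An empty result list corresponds to the case where the predicate cannot be instantiated.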

Given a set of predicates, a schema (shown in Chapter 5, Figure 5.3) is a finite state machine over the language of predicates with variable references. At each step during schema instantiation, a current node is kept and all the predicates in the edges departing from the current node are instantiated. A focus mechanism will then select the next node (and add the message to the document plan). The instantiation process finishes when no new predicate can be instantiated departing from the current node. While the schema itself is simple (an automaton with predicate and variable names on its edges), the instantiation process presents some complexities. Interestingly, my schema induction algorithm is independent of the instantiation process or its internal details. However, this complexity prevents the use of existing learning techniques for finite state machines to learn the schemata.
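The instantiation loop can be sketched as follows, under strong simplifying assumptions: predicates are replaced by precomputed lists of messages, each message is used at most once, and the focus mechanism is reduced to a placeholder that takes the first available option. The toy automaton and all names are hypothetical; the actual schemata are described in Chapter 5.

```python
from typing import Dict, List, Tuple

# Edges of a toy schema: (source state, predicate name, target state).
EDGES: List[Tuple[int, str, int]] = [
    (0, "intro-person", 1),
    (1, "spouse", 1),
    (1, "movie", 2),
    (2, "movie", 2),
]

# Messages each predicate could be instantiated into (precomputed here).
AVAILABLE: Dict[str, List[str]] = {
    "intro-person": ["intro-person(person-1)"],
    "spouse": ["spouse(person-1,person-4)"],
    "movie": ["movie(bond-1,person-1)", "movie(movie-2,person-1)"],
}


def instantiate_schema(edges, available, start: int = 0) -> List[str]:
    """Walk the automaton, collecting messages into a document plan."""
    plan: List[str] = []
    used = set()
    state = start
    while True:
        # Instantiate every predicate on an edge leaving the current state.
        options = [
            (msg, target)
            for source, pred, target in edges if source == state
            for msg in available.get(pred, []) if msg not in used
        ]
        if not options:
            return plan             # nothing new can be instantiated
        # Placeholder focus mechanism: take the first option.
        msg, state = options[0]
        used.add(msg)
        plan.append(msg)


plan = instantiate_schema(EDGES, AVAILABLE)
print(plan)
```

The loop terminates because every step consumes one message; a real focus mechanism would replace the `options[0]` choice.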

Schemata are explained in detail in Chapter 2 (McKeown’s original definition, Section 2.2.1) and Chapter 5 (my schemata implementation, Section 5.1).

My research hypothesis is three-fold. First, I share McKeown’s (1983) original research hypothesis that the text structure is usually different from the knowledge structure.9 I refer to the structure of the knowledge as domain orderings, such as time or space. This type of information controls some of the placement of information in the text, e.g., news articles about a certain event enumerate some events chronologically (Barzilay et al., 2001). However, these orderings cannot be expected a priori for every domain and, in general, text structure is not governed by them.

Second, as this dissertation focuses on learning schemata, my main hypothesis is centered on the feasibility of automatically constructing schemata from indirect observations, using shallow methods. Indirect observations refer to learning schemata from positive examples contained in a Text-Knowledge corpus. I have this corpus instead of fully supervised training material (in the form of document plans or sequences of predicates). Text has a linear structure, defined by the fact that words come one after another. Even though exact word placement is misleading (as it interacts with aggregation and lexicalization), I can match text and knowledge and migrate the linear structure of the text to the knowledge. In that sense, my hypothesis implies that shallow text analysis methods can be used to acquire Domain Communicative Knowledge (cf. Section 2.2, Chapter 2) for schema construction, via Indirect Supervised Learning as described in the next section. This level of analysis lets me gain information about the behavior of the domain in general, but not necessarily solve an understanding task for each particular text.

Finally, part of my research hypothesis is that schemata are useful as a learning representation. Their simplicity, a fact that has been criticized in the literature (Zock, 1986, Hovy, 1993), makes them a prime candidate for learning. Moreover, the fact that they are learnable should shed more light on their empirical importance (already highlighted by the number of deployed NLG systems employing them (Paris, 1987, Maybury, 1988, Bateman and Teich, 1995, Lester and Porter, 1997, Milosavljevic, 1999)).10

The input to my learning system is thus knowledge and text. For the task of learning Strategic Generation logic, this is a supervised setting; the learning system is presented with the input to Strategic Generation (knowledge) and text that is determined from the output of the strategic generation component (relevant knowledge and document plans). As the text is not the output of the Strategic Generation component, but something that can be derived from the output of the strategic component, my solution to this problem is Indirect Supervised Learning, which I explain at length in Chapter 3, Section 3.2.

As mentioned in Section 1.1, the output of the Strategic Generation component is defined as follows: the relevant knowledge pool is a subset of the knowledge base, which I assume is a frame-based knowledge representation. The document plan is a sequence of messages (rhetorical predicates instantiated from the relevant knowledge pool), segmented into paragraphs and aggregation sets.11

To obtain the relevant knowledge pool and the document plan, I build without human intervention an intermediate structure, the matched text, by using assumptions on how knowledge can be verbalized within the text. These matched texts are built without any examples of actual matched texts (unsupervised learning). For example, the first sentence of the biography in Figure 1.1 will produce the following matched text12 when matched against the knowledge base shown in the figure:

name-first(person-1,'Sean')name-last(person-1,'Connery') is an occupation(person-1,c-actor) and a occupation(person-1,c-producer)

With the matched text in hand, it is easy to see which knowledge has been selected for inclusion in the text: any piece of knowledge matched to a text segment is thus assumed to be selected by the human author for inclusion in the text. Having now a task (Content Selection) with training input (KB) and output (KB plus selection labels) pairs, this comprises a well defined learning problem, where Content Selection rules can be learned.
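The labelling step can be illustrated with a short Python sketch. It assumes, purely for the example, that facts are written as strings in the notation of the matched text shown earlier and that a regular expression is enough to pull them out; the `birth-place` fact is invented here to show an omitted item and is not taken from Figure 1.1.

```python
import re

# Matches one piece of knowledge such as name-first(person-1,'Sean').
FACT = re.compile(r"[a-z-]+\([^()]*\)")


def selection_labels(matched_text, knowledge_base):
    """Label each fact as selected if it was matched to a text segment."""
    matched = set(FACT.findall(matched_text))
    return {
        fact: ("selected" if fact in matched else "omitted")
        for fact in knowledge_base
    }


MATCHED_TEXT = (
    "name-first(person-1,'Sean')name-last(person-1,'Connery') is an "
    "occupation(person-1,c-actor) and a occupation(person-1,c-producer)"
)

KNOWLEDGE_BASE = [
    "name-first(person-1,'Sean')",
    "name-last(person-1,'Connery')",
    "occupation(person-1,c-actor)",
    "occupation(person-1,c-producer)",
    "birth-place(person-1,'Edinburgh')",  # hypothetical fact, never verbalized
]

LABELS = selection_labels(MATCHED_TEXT, KNOWLEDGE_BASE)
```

The resulting dictionary is the (KB plus selection labels) output side of a Content Selection training pair.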

Continuing with the example, let’s suppose we have two biographies:

The first biography is a business-style biography, while the second one is a family-style biography. For each style, the matched text provides labels for each fact in the knowledge base. These labels (selected or omitted) are shown in Figure 1.7 for the business-style biography.


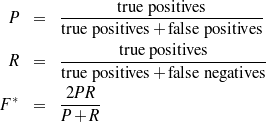

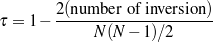

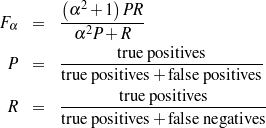

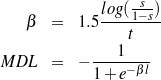

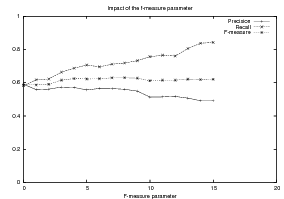

To choose among the different possible rulesets (e.g., ruleset 1 and ruleset 2), I look at the information retrieval task of retrieving the labels (selected, omitted) for each piece of knowledge in the input knowledge base. The F*-measure from information retrieval (van Rijsbergen, 1979) of this retrieval task can be used as a likelihood for each ruleset. The supervised learning step becomes searching for the ruleset that maximizes this likelihood. Therefore, if the F*-measure of the labels obtained by applying ruleset 1 to the training set is greater than the F*-measure of applying ruleset 2, then ruleset 1 should be preferred over ruleset 2.
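The ruleset comparison can be made concrete with a small Python sketch. It uses the standard weighted F-measure of van Rijsbergen with a `beta` parameter; whether this coincides exactly with the F*-measure used in the experiments is an assumption. The two rulesets and the fact names are hypothetical, and a ruleset is modelled simply as a function that says whether a fact is selected.

```python
def f_measure(gold, predicted, beta=1.0):
    """Weighted harmonic mean of precision and recall over selected facts."""
    true_positives = len(gold & predicted)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def score(ruleset, knowledge, gold, beta=1.0):
    """Apply a ruleset to the knowledge and score the labels it produces."""
    predicted = {fact for fact in knowledge if ruleset(fact)}
    return f_measure(gold, predicted, beta)


# Hypothetical training instance: all facts, and those labelled as selected.
KNOWLEDGE = ["name-first", "name-last", "occupation", "birth-place", "relative"]
GOLD = {"name-first", "name-last", "occupation"}

# Hypothetical rulesets.
RULESETS = {
    "ruleset_1": lambda fact: fact.startswith("name") or fact == "occupation",
    "ruleset_2": lambda fact: True,  # selects everything
}

SCORES = {name: score(rules, KNOWLEDGE, GOLD) for name, rules in RULESETS.items()}
PREFERRED = max(SCORES, key=SCORES.get)
```

Here the first ruleset reproduces the labels exactly, while the second has perfect recall but a precision of 3/5, so the first is preferred.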

The matched text also provides input and output for learning schemata: the input to a schemata-based strategic component is the relevant knowledge pool, extracted in the previous Content Selection step. The output of the schema, the document plan, cannot be directly extracted from the matched text, but the sequence of matched pieces of knowledge can approximate the messages.

That is, the sequence of pieces of knowledge (facts) extracted for the matched text of the biography shown in Figure 1.1 will read as follows:

[ name-first(person-1) name-last(person-1) occupation(person-1) occupation(person-1) ]
[ ex-spouse(person-1) name-first(person-2) name-last(person-2) occupation(person-2) relative(person-2) name-first(person-3) ]
[ spouse(person-1) name-first(person-4) name-last(person-4) occupation(person-4) ]
[ work(person-1) title(bond-1) reason(oscar-1) award(person-1) title(oscar-1) sub-title(oscar-1) ]

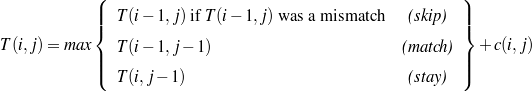

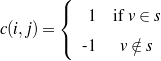

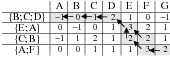

Compare this to the document plan shown in Figure 1.1. As document plans are at the predicate level, I mine patterns over the placement of atomic pieces of knowledge in the knowledge sequence extracted from the text (in the example above, I find that name-first, name-last, occupation is a recurring pattern), mine order constraints over them and use the constraints to evaluate the quality of document plans from possible schemata. Finally, to compare sequences of atomic pieces of knowledge to predicates, I defined a dynamic programming-based metric, explained in Chapter 5, Section 5.4.2.
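To make the notion of a recurring pattern concrete, the following Python sketch counts contiguous runs of predicate names and keeps those supported by enough sequences. It is a deliberately simplified stand-in for the pattern mining of Chapter 5: treating each bracketed segment of the example as a separate sequence, using fixed-length contiguous patterns, and the 0.5 support value are all assumptions made for the illustration.

```python
from collections import Counter


def frequent_patterns(sequences, length, min_support):
    """Return contiguous patterns occurring in enough of the sequences."""
    support = Counter()
    for seq in sequences:
        # Count each pattern at most once per sequence.
        seen = {tuple(seq[i:i + length]) for i in range(len(seq) - length + 1)}
        support.update(seen)
    cutoff = min_support * len(sequences)
    return {pattern for pattern, count in support.items() if count >= cutoff}


# Predicate names of the four bracketed segments in the example above.
SEQUENCES = [
    ["name-first", "name-last", "occupation", "occupation"],
    ["ex-spouse", "name-first", "name-last", "occupation", "relative",
     "name-first"],
    ["spouse", "name-first", "name-last", "occupation"],
    ["work", "title", "reason", "award", "title", "sub-title"],
]

PATTERNS = frequent_patterns(SEQUENCES, length=3, min_support=0.5)
```

On this input the only pattern of length three that appears in at least half of the sequences is name-first, name-last, occupation.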

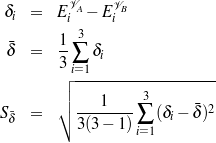

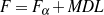

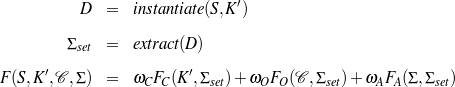

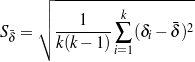

Given a set of relevant knowledge pool–document plan pairs, I define the likelihood of a schema by summing up three terms:

As with Content Selection, defining means to tell good schemata from bad ones renders the learning problem an optimization one.

On technical grounds, Figure 1.8 shows a generalized graphical model13 for my system. There, my observed input (knowledge base) and output (text) are marked in black. The mappings I am interested in learning are “knowledge base” to “relevant knowledge pool” and “relevant knowledge pool” to “document plan”.

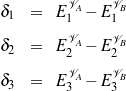

Indirect Supervised Learning involves an unsupervised step, based on assumptions on the structure of the model, to elucidate the hidden variables and supervised steps to generalize from the constructed mapping.14

The assumptions on the model I use are related to the ways knowledge can appear on the text.

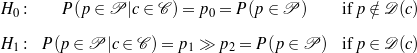

More specifically, I see the knowledge as a collection of atomic items (the concepts), and I see

the text as a collection of phrases. The relation between phrases and concepts is given by a

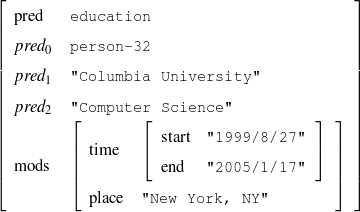

verbalization function  from concepts to sets of phrases (possible verbalizations). The model I

use for the unsupervised part is summarized by the following two tests, where H0 is the null

hypothesis, p and c are particular phrases and concepts,

from concepts to sets of phrases (possible verbalizations). The model I

use for the unsupervised part is summarized by the following two tests, where H0 is the null

hypothesis, p and c are particular phrases and concepts,  is the set of phrases that

make a particular text,

is the set of phrases that

make a particular text,  is the set of concepts that make a particular knowledge

representation (where

is the set of concepts that make a particular knowledge

representation (where  and

and  refer to the same entity) and

refer to the same entity) and  is the verbalization

dictionary:

is the verbalization

dictionary:

Here, H0 says that if a given phrase p is not a verbalization of a given concept c, then knowing that c holds will not change the chances of p appearing in the text. On the contrary, H1 says that if p is a verbalization of c, knowing that c holds makes it much more likely for p to appear in the text.
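One possible reading of these two hypotheses as a statistical test is sketched below in Python. The sketch compares how often a phrase appears in texts whose knowledge contains the concept against how often it appears in the remaining texts, using Welch's two-sample t statistic. The choice of statistic, the document encoding and the toy data are assumptions of this example; the test actually used is the one defined in Chapter 3.

```python
import math


def welch_t(sample_a, sample_b):
    """Welch's t statistic for two samples of 0/1 indicators."""
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two documents")
    mean_a = sum(sample_a) / n_a
    mean_b = sum(sample_b) / n_b
    var_a = sum((x - mean_a) ** 2 for x in sample_a) / (n_a - 1)
    var_b = sum((x - mean_b) ** 2 for x in sample_b) / (n_b - 1)
    std_err = math.sqrt(var_a / n_a + var_b / n_b)
    if std_err == 0.0:
        # Degenerate case: no variation inside either group.
        if mean_a == mean_b:
            return 0.0
        return math.copysign(math.inf, mean_a - mean_b)
    return (mean_a - mean_b) / std_err


def verbalization_t(documents, phrase, concept):
    """Does knowing that the concept holds change the chances of the phrase?"""
    with_c = [int(phrase in phrases)
              for concepts, phrases in documents if concept in concepts]
    without_c = [int(phrase in phrases)
                 for concepts, phrases in documents if concept not in concepts]
    return welch_t(with_c, without_c)


# Hypothetical corpus: (concepts in the knowledge, phrases in the text).
DOCUMENTS = (
    [({"c-actor"}, {"actor"})] * 5
    + [({"c-actor"}, set())]
    + [(set(), {"actor"})]
    + [(set(), set())] * 5
)

T_VALUE = verbalization_t(DOCUMENTS, "actor", "c-actor")
```

A large positive value is evidence against H0 for that phrase and concept pair; a cut-point on the statistic then decides which pairs enter the verbalization dictionary.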

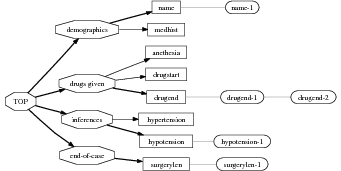

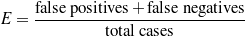

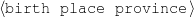

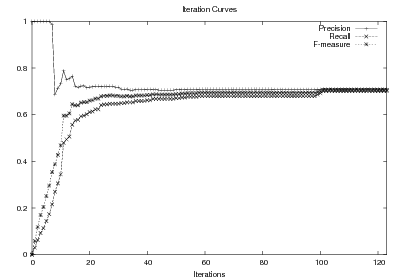

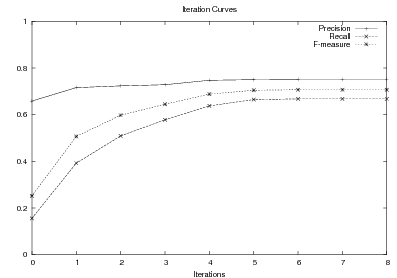

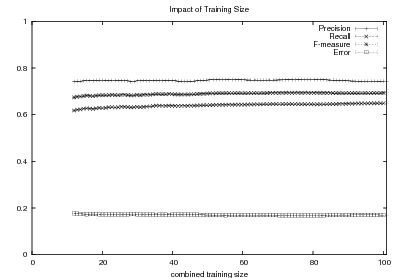

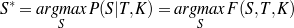

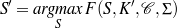

Supervised Learning. As the experiments in Chapter 3 will attest, the matched text construction process is able to identify in an automatic fashion training material with an F*-measure as high as 0.70 and as low as 0.53. These results imply that learning using the matched texts as training material will require a robust machine learning methodology. I will now mention some features common to the supervised learning algorithms presented in Chapter 4 and Chapter 5. Both Content Selection rules and Document Structuring schemata are symbolic and highly structured in nature. In both cases, I have input and output pairs (I,O) to learn them, extracted from the matched text. I am interested in finding the object o* (belonging to the set of all possible Content Selection rules or Document Structuring schemata, in either case) such that o* maximizes the posterior probability given the training material:

Here, instead of computing the probability P(o|I,O), I use the input/output pairs to compute for each putative object o a likelihood f(o,I,O). This likelihood will also allow me to compare among the different o and it is thus a quality function in the representation space. In both cases, I use a similarly defined function: employ the rules or the schemata to generate from the input I a set of outputs O′. The sought quality function becomes the distance between the training output and the produced output, ||O-O′||, for suitable distances.

Given the quality function, finding o implies a search process on the large space of representations. Several algorithms can be of use here (e.g., A*, hill-climbing or simulated annealing). However, given the highly structured nature of my representations, I have found it valuable to define a successor instance coming from two instances in the search pool, instead of one. This type of approach is known as Genetic Algorithms (GAs). In general, I consider GAs as a meaningful way to perform symbolic learning with statistical methods.
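The search just described can be sketched as a small genetic algorithm in Python. The representation is a toy one chosen for the example (a ruleset is the set of attribute names it selects), the quality function is the negated size of the symmetric difference between the training output O and the produced output O′, and the crossover, mutation and population settings are all hypothetical rather than those of Chapters 4 and 5.

```python
import random


def fitness(rules, inputs, gold):
    """Negated distance between training output and produced output."""
    produced = {fact for fact in inputs if fact.split("(")[0] in rules}
    return -len(produced ^ gold)


def crossover(parent_a, parent_b, rng):
    """Build one successor from two instances in the search pool."""
    return frozenset(x for x in parent_a | parent_b if rng.random() < 0.5)


def mutate(rules, attributes, rng):
    """Flip the membership of one randomly chosen attribute."""
    return rules ^ {rng.choice(attributes)}


def genetic_search(attributes, inputs, gold,
                   pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    population = [
        frozenset(a for a in attributes if rng.random() < 0.5)
        for _ in range(pop_size)
    ]

    def score(rules):
        return fitness(rules, inputs, gold)

    for _ in range(generations):
        population.sort(key=score, reverse=True)
        parents = population[: pop_size // 2]  # the best half survives
        children = [
            mutate(crossover(*rng.sample(parents, 2), rng), attributes, rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=score)


# Hypothetical training pair (I, O).
ATTRIBUTES = ["name-first", "name-last", "occupation", "relative", "work"]
INPUTS = {a + "(person-1)" for a in ATTRIBUTES}
GOLD = {"name-first(person-1)", "name-last(person-1)", "occupation(person-1)"}

BEST = genetic_search(ATTRIBUTES, INPUTS, GOLD)
```

Because the best half of each generation is carried over unchanged, the best quality in the pool never decreases from one generation to the next.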

System Architecture. The process described above is sketched in Figure 1.9, using the thresholds and parameters15 described in Table 1.1. The Text and Knowledge corpus is fed into a matched text construction process, described in Chapter 3. This process employs the model with a minimum score on the t-values (thrt) for concepts that appear in at least thrsupp documents. Once some matches have been identified, a disambiguation process is run using a window of w words around each match.

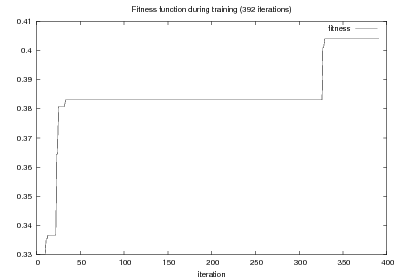

From the matched text, a Content Selection dataset is extracted and used to learn Content Selection rules (presented in Chapter 4). This process is centered on a GA with a population of populationsize. The initial population is built using a breadth-first search up to depth depth in the knowledge graph. The fitness function weights precision and recall using a weight of α.
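A depth-bounded breadth-first search over a knowledge graph can be sketched as follows in Python. The graph encoding (a dictionary from nodes to labelled edges) and the attribute names are hypothetical, and the sketch only enumerates attribute paths; how such paths are turned into the initial population of rules is described in Chapter 4 and is not reproduced here.

```python
from collections import deque


def paths_up_to_depth(graph, root, depth):
    """Enumerate attribute paths from the root, shortest paths first."""
    paths = []
    queue = deque([(root, ())])
    while queue:
        node, path = queue.popleft()
        if len(path) == depth:
            continue  # depth cut-off; this also bounds walks around cycles
        for attribute, target in graph.get(node, []):
            new_path = path + (attribute,)
            paths.append(new_path)
            queue.append((target, new_path))
    return paths


# Hypothetical frame-based knowledge: node -> [(attribute, target), ...].
GRAPH = {
    "person-1": [("name", "name-1"), ("occupation", "occ-1")],
    "name-1": [("first", "Sean"), ("last", "Connery")],
}

PATHS = paths_up_to_depth(GRAPH, "person-1", depth=2)
```

With a cut-off of two, the search first returns the two paths of length one and then the two paths that continue through the name frame.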

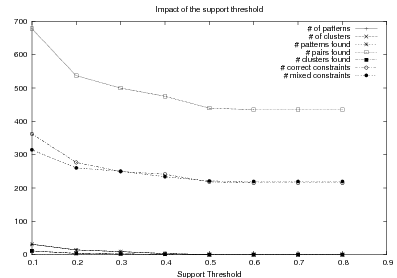

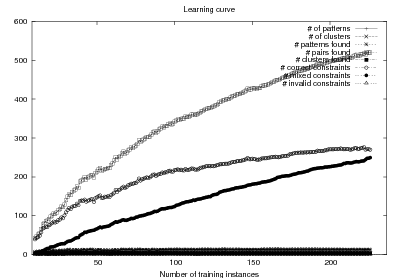

Also from the matched text, sequences of semantic labels are extracted and used to learn Order Constraints (Chapter 5). For this process, only patterns that appear in at least supportthreshold sequences are further considered. The mined constraints are only considered if their associated probability is above throc.

Finally, the Content Selection dataset, the Order Constraints, sequences of atomic values extracted from the matched text and rhetorical predicates are all used to learn schemata (Chapter 5). A population of populationsize(2) is used to learn schemata with a maximum of nv variables per type.

| Threshold | Description | Value |
|---|---|---|
| Chapter 3 | | |
| thrt | t-test cut-point | 9.9 |
| thradd | Percentage of the available number of matches to run the on-line dictionary induction. | 20% |
| thrtop | Number of top scoring matches to add in each step (computed as a percentage of the total number of matches). | 10% |
| w | Disambiguation window, in words. | 3 |
| thrsupp | Concept support, in percentage of the total number of instances. | 20% |
| Chapter 4 | | |
| populationsize | Size of the population in the genetic search for Content Selection rules. | 1000 |
| depth | Depth cut-off for the breadth-first search building the population for the rule search. | 6 |
| α | F-measure weighting. | 2.0 |
| l | Saturation area of the MDL sigmoid function. | 0.99 |
| Chapter 5 | | |
| support threshold | Minimum number of sequences a pattern should match to be further considered (this threshold is expressed as percentage of the total number of sequences). | 30% |
| throc | Probability threshold for a given order constraint to be further considered. | 0.98 |
| nv | Number of variables per type. | 2 |
| windowsize | How many items are used to build a pattern. | 8 |
| relative distance threshold | Clustering parameter when mining order constraints. | 0.1 |
| probability cut-point | Minimum probability for accepting a learned constraint. | 0.99 |

This thesis puts forward contributions at three levels. First, it contributes by devising, implementing and testing a system for the automatic construction of training material for learning Content Selection and Document Structuring logic. The technique described in Chapter 3 is able to process hundreds of text and knowledge pairs and produce Content Selection training material with quality as high as 74% precision and 67% recall. The Document Structuring material (orderings) it produces is also highly correlated to hand-annotated material. This matched text construction process emphasizes the use of structured knowledge as a replacement for manual tagging. The Text-Knowledge corpus in the biographies domain assembled as part of this thesis is now a valuable resource, available for further research in the area, together with the machinery to obtain new training material in a number of domains discussed in Chapter 9. The evaluation methodology employed in this thesis is also a contribution: using a number of human-written texts for evaluation, dividing them into training and test sets and using the test set to evaluate both the unsupervised and the supervised steps. Alternative approaches will require larger amounts of human-annotated data or will leave the unsupervised part without proper evaluation.

Second, among my contributions are also the proposal and study of techniques to learn Content Selection logic from training material consisting of structured knowledge and selection labels. As the training material is automatically obtained, it contains a high degree of noise. Here, my contribution includes techniques that are robust enough to learn in spite of this noise. I set the problem as a rule optimization of the F*-measure over the training material. My techniques have elucidated Content Selection rules in four different styles in the biographies domain. Moreover, my experiments in Content Selection contribute to our understanding of the Content Selection phenomenon at several levels. First, they nicely separate the need for off-line (high-level) Content Selection from on-line Content Selection, where the approach described in this thesis could potentially be used to learn Content Selection logic at both levels.16 From a broader perspective, my acquired Content Selection rules provide an empirical metric for the interestingness of given facts.

Finally, I defined the problem of learning Document Structuring schemata from indirect observations, proposing, implementing and evaluating two different, yet similar techniques in two different domains. The Document Structuring problem is one of the most complex problems in NLG. My techniques are among the first efforts to effectively learn Document Structuring solutions automatically. At a fine grained level of detail, my main contribution is a dynamic-programming metric that compares sequences of values (that can be read out from text) to sequences of messages (that are produced by the schemata). The acquired schemata are written in a declarative formalism, another contribution of this thesis. Previous implementations of schemata had mixed declarative/procedural definitions that impose a high burden for any learning technique.

I now discuss my experimental domains (Medical Reports and Person Descriptions). These domains were central to research projects I have been involved with. Other potential domains are discussed in Section 9.3, including Biology, Financial Markets, Geographic Information Systems, and Role Playing Games.

MAGIC (Dalal et al., 1996, McKeown et al., 2000) is a system designed to produce a briefing of patient status after the patient undergoes a coronary bypass operation. Currently, when a patient is brought to the intensive care unit (ICU) after surgery, one of the residents who was present in the operating room gives a briefing to the ICU nurses and residents. The generation system uses data collected from the machine in the operating room to generate such a presentation, avoiding distracting a caregiver at a time when they are critically needed for patient care.

Figure 1.10 shows an example of a data excerpt (a data file in the classic formalism with 127 facts on average) and presentation. Several of the resident briefings were collected and annotated for a past evaluation. Each transcription was subsequently annotated with semantic tags as shown in Figure 6.2.

| Input Knowledge | MAGIC output |
|---|---|
| (patient-info-12865, c-patient, (a-age, age-12865), (a-name, name-12865), (a-gender, gender-12865), (a-procedure, procedure-12865), (a-birth-date, …), …) (age-12865, c-measurement, (a-value, 58), (a-unit, "year")) (gender-12865, c-male) (ht-12865, c-measurement, (a-value, 175), (a-unit, "centimeter")) (name-12865, c-name, (a-first-name, "John"), (a-last-name, "Doe")) (procedure-12865, c-procedure, (a-value, "mitral valve replacement")) … | John Doe is a 58 year-old male patient of Dr. Smith undergoing mitral valve repair. His weight is 92 kilograms and his height 175 centimeters. Drips in protocol concentrations include Dobutamine, Nitroglycerine and Levophed. He received 1000 mg of Vancomycin and … |

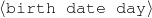

As part of the Question Answering project (AQUAINT) taking place jointly at Columbia University and University of Colorado, I have developed ProGenIE, a system that generates biographic descriptions of persons, taking as input information gathered from the WWW. The biographical descriptions domain is central, as it has available larger amounts of data compared to the medical domain. Because of this data availability, I only pursued Content Selection experiments in this domain.

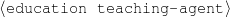

For these experiments, fact-sheet pages and other structured data sources provide the input knowledge base (Duboue and McKeown, 2003b). The texts are the biographies written by professional writers or volunteers, depending on the corpus. Figure 1.11 exemplifies an aligned pair.

This domain is very rich, allowing me to gather multiple biographies for each person for whom I have independently obtained knowledge. Such a multi-aligned corpus has also proven useful for mining verbalization templates (Barzilay and Lee, 2002).

| Input Knowledge | Human-written Text |
|---|---|
| fact(person42,name,'Sean Connery'). fact(person42,birthname,'Thomas Sean Connery'). fact(person42,birthdate,month('August'),day('25'), fact(person42,birthplace,'Edinburgh, Scotland'). fact(person42,education,'Dropped out of school at age 13'). fact(person42,family,Mother,name('Euphamia Connery')). fact(person42,family,Brother,name('Neil')). fact(person42,family,Son,name('Jason Joseph Connery')). fact(person42, occupation, "actor"). fact(person42, occupation, "director"). fact(person42, occupation, "model"). fact(person42, occupation, "producer"). … | Actor, born Thomas Connery on August 25, 1930, in Fountainbridge, Edinburgh, Scotland, the son of a truck driver and charwoman. He has a brother, Neil, born in 1938. Connery dropped out of school at age fifteen to join the British Navy. Connery is best known for his portrayal of the suave, sophisticated British spy, James Bond, in the 1960s. … |

This dissertation is divided into the following chapters.

In this chapter, I discuss related work in Strategic Generation and learning in NLG. These topics are very broad; I have decided to focus on a small number of highly relevant papers. I first analyze related work in Content Selection (Section 2.1), in particular, the work on the ILEX (Cox et al., 1999, O’Donnell et al., 2001) and STOP (Reiter et al., 1997) projects. Afterwards, I introduce the Document Structuring task together with schemata and RST, its two most widespread document structuring solutions. I then relate my work to other recent learning efforts in NLG in Section 2.3. To conclude this chapter, I present related work in a number of assorted areas, including the relation between the strategic component and other modules of the NLG pipeline, planning diverse media, summarization and biography generation.

Content Selection, the task of choosing the right information to communicate in the output of an NLG system, has been argued to be the most important task from a user’s standpoint; users may tolerate errors in wording, as long as the information being sought is present in the text (Sripada et al., 2001). This task has different levels of complexity, with solutions requiring a full inferential engine in certain cases (Zukerman et al., 1996).

I will present here one of the most recent Content Selection algorithms in the literature, developed as part of the ILEX project, and compare it to my two-level Content Selection approach. In Section 2.1.2, I will summarize the knowledge acquisition process that the researchers in the STOP project pursued to build their Content Selection module. I will also compare that human acquisition with the automated one described in Chapter 4. Some remarks regarding integrated or separated Content Selection close this section.

One of the most well-regarded integrated Content Selection algorithms proposed in the literature is the one used in the ILEX project (Cox et al., 1999, O’Donnell et al., 2001), a generation system that provides dynamic labels for exhibits in a Web-based museum gallery. ILEX tries to improve current static (fully pre-written) Web pages by means of Dynamic Hypertext (Dale et al., 1998), the marriage of NLG and hypertext systems. In Dynamic Hypertext, a generator will produce not only text that lives in the nodes of a hyper-linked environment, but also the links between these nodes.

In the ILEX Content Selection algorithm, each tuple coming from a relational database is transformed into an object definition where each of the entries in the object must be declared in a predicate definition (Figure 2.1). These definitions contain, among other things, a simplified user model composed of three items: interest (how appealing is this fact to the user, static), importance (contribution of the fact for an overall task, also static) and assimilation (level of acquaintance to the fact, dynamically updated). These numbers are hardwired in the predicate definition.

Their algorithm computes a relevant knowledge pool with an innovative relevancy metric. They follow the approach to Content Selection presented by (McKeown, 1985) (pages 113–121, “Selection of relevant knowledge”), that is, to take the object being described and the entities directly reachable in the Content Potential, a graph with objects as nodes. ILEX also collects all entities relevant to the entity being described, using a spreading-activation relevancy metric. In this metric, the relevancy of an object is given by the mathematical combination of the static importance of the object and the relevancy of the object in the path to the object being described. Their relevance calculation allows them to prioritize the top n most salient items, while maintaining coherence. Different links preserve relevance in different ways. The ILEX authors assigned hand-picked relevancy multipliers to each class of links.
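The flavor of such a spreading-activation metric can be conveyed with a short Python sketch. It is not the ILEX algorithm: the way relevance and importance are combined (a plain product), the rule that an entity keeps the best relevance over all paths, the link classes, the multipliers and the importance values are all assumptions made for this illustration. Multipliers are assumed to lie between 0 and 1, which guarantees that the propagation terminates.

```python
import heapq


def spread_relevance(graph, multipliers, focus):
    """Propagate relevance outward from the object being described."""
    relevance = {focus: 1.0}
    heap = [(-1.0, focus)]
    while heap:
        negative, node = heapq.heappop(heap)
        current = -negative
        if current < relevance.get(node, 0.0):
            continue  # stale entry: a better path was already found
        for link, target in graph.get(node, []):
            reached = current * multipliers[link]
            if reached > relevance.get(target, 0.0):
                relevance[target] = reached
                heapq.heappush(heap, (-reached, target))
    return relevance


def top_items(graph, multipliers, importance, focus, n):
    """Rank entities by static importance times propagated relevance."""
    relevance = spread_relevance(graph, multipliers, focus)
    scored = {
        entity: importance.get(entity, 0.0) * value
        for entity, value in relevance.items()
        if entity != focus
    }
    return sorted(scored, key=scored.get, reverse=True)[:n]


# Hypothetical museum data: node -> [(link class, target), ...].
GRAPH = {
    "jewel": [("made-by", "designer"), ("style", "art-deco")],
    "designer": [("made", "brooch")],
}
MULTIPLIERS = {"made-by": 0.9, "style": 0.5, "made": 0.8}
IMPORTANCE = {"designer": 0.5, "art-deco": 0.8, "brooch": 1.0}

TOP = top_items(GRAPH, MULTIPLIERS, IMPORTANCE, "jewel", n=2)
```

In this toy gallery the brooch, although two links away from the jewel, outranks the directly linked style because its importance is high and both links on its path preserve relevance well.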

The ILEX Content Selection algorithm is complementary to my approach. For instance, my algorithm could be used to provide ILEX interest scores, an appealing topic for further work.

(Reiter et al., 1997)’s work addresses the rarely studied problem of knowledge acquisition for Content Selection in generation. Knowledge acquisition is used for STOP, an NLG system that generates letters encouraging people to stop smoking. STOP’s input is a questionnaire, filled out by a smoker; STOP uses the questionnaire to produce a personalized letter encouraging the smoker to quit. Reiter et al. explored four different knowledge acquisition techniques: directly asking experts for knowledge, creating and analyzing a corpus, structured group discussions and think-aloud sessions. I detail each of them below.

The first technique they employed was to directly ask domain experts for Content Selection knowledge, given that STOP was a multidisciplinary project with motivated experts within reach of the NLG team. This technique proved unsuccessful, as experts would usually provide “textbook style” responses (academic knowledge). Such knowledge differs considerably from their actual knowledge as practitioners.

Their next effort focused on creating a small Text-Knowledge corpus (what they call a conventional corpus). They collected 11 questionnaire-letter pairs, with letters written by five different experts. Problems arose when comparing letters written by different doctors; the differences in style, length and content made this corpus very difficult to use.

Their final two methods were borrowed from the Knowledge Engineering literature (Buchanan and Wilkins, 1993) and proved to be very useful (structured group discussions and think-aloud sessions).

This research is relevant to my Content Selection work (presented in Chapter 4) where I propose automated mining of Content Selection rules from a Text-Knowledge corpus similar to the one Reiter et al. collected. Interestingly enough, they found the corpus approach insufficient. I find these possible explanations to their problem:

These problems are not present in my biographies work (nor in a number of domains that I have identified as suitable for application of my technique), where I have found hundreds of biography-knowledge pairs, written by professional biographers who have to adhere to a writing style.

While most classical approaches (Moore and Swartout, 1991, Moore and Paris, 1993) tend to perform the Content Selection task integrated with the Document Structuring, there seems to exist some momentum in the literature for a two-level Content Selection process (Lester and Porter, 1997, Sripada et al., 2001, Bontcheva and Wilks, 2001). For instance, (Lester and Porter, 1997) distinguish two levels of content determination: local content determination is the “selection of relatively small knowledge structures, each of which will be used to generate one or two sentences,” while global content determination is “the process of deciding which of these structures to include in an explanation.” Global content determination makes use of the concept of viewpoints, introduced by (Acker and Porter, 1994), which allow the generation process to be somewhat detached from the knowledge base (KB). My Content Selection rules, then, can be thought of as picking the global Content Selection items.

In the same vein, (Bontcheva and Wilks, 2001) use a Content Selection algorithm that operates at early stages of the generation process, allowing for further refinement down the pipeline. They present it as an example of techniques to overcome the identified problems in pipelined architectures for generation (they call this a recursive pipeline architecture, similar to (Reiter, 2000)).

Most recently, the interest in automatic, bottom-up content planners has put forth a simplified view where the information is entirely selected before the document structuring process begins (Marcu, 1997, Karamanis and Manurung, 2002, Dimitromanolaki and Androutsopoulos, 2003). While this approach is less flexible, it has important ramifications for machine learning, as the resulting algorithm can be made simpler and more amenable to learning. Nevertheless, two-level Content Selection can provide a broad restriction of the information to consider, with more fine grained, hand-built algorithms applied later on to select information in context or with length restrictions.

My work is in the schema tradition, with a two-level Content Selection. The first, global level is performed with Content Selection rules and the second level takes place within the Document Structuring schema.

I will now address some general aspects of the Document Structuring problem, namely its output and the overall algorithms employed, before introducing schemata and RST-based planners, the two most widely deployed solutions.

The output of Document Structuring is a document plan, normally a tree or a sequence of messages. The relations between these messages are usually rhetorical in nature and are employed later on to divide the discourse into textual units, such as paragraphs or bulleted lists (Bouayad-Agha et al., 2000) and sentences (Shaw, 2001, Cheng and Mellish, 2000). Most systems use trees as document plans; the RAGS consensus architecture (Cahill et al., 2000) defines the Rhetoric representation level as a tree. Sequences, on the other hand, are more restricted than trees, but offer enough expressive power for several applications. Examples include the works of (Huang, 1994) and (Mellish et al., 1998). Sequences are important for my work as schemata, as defined originally by (McKeown, 1985), use sequences as document plans (when employed without schemata recursion). Moreover, even if trees have more momentum as document plans in the literature, several incompatibility results (Marcu et al., 2000, Bouayad-Agha et al., 2000) may suggest otherwise. The use of trees seems forced in some cases; for example, (Mann and Thompson, 1988) claim that the rhetorical structure ought to be a tree in most texts. However, their Joint rhetorical relation seems to be just an ad hoc procedure to keep a tree from becoming a forest (Rambow, 1999). Finally, (Danlos et al., 2001) analyze cases where trees are not expressive enough. Their solution is to employ equational systems (actually directed acyclic graphs or DAGs) that increase expressivity by reusing rhetorical nodes.

The algorithm normally involves a search on the space of possible document plans. That is the case of Schemata-based, RST-based or opportunistic planners, which I discuss later in this section. However, several Document Structuring problems in the literature have been solved with no search, as in a good number of cases, planning is just a label for a stage solved by other means, as pointed out by (Rambow, 1999). Complex planning process examples include (Huang, 1994), working on automatic verbalization of the output of theorem provers and (Ansari and Hirst, 1998), working on generating instructional texts. In general, full planning is the most comprehensive solution to the document structuring problem, although it is expensive and requires modeling complex issues such as the intentional status of both hearer and speaker, and the full consequences of all actions that may not be necessary (or even feasible) in all domains of interest to NLG practitioners.

Another question addressed by previous research is the direction in which the plan is built. Normally, speed and ease of understanding motivate building top-down planners, e.g., (Young and Moore, 1994), which uses the Longbow AI planner (Young, 1996). However, other authors, for example (Marcu, 1997), see the whole planning process as a linking among facts by means of input-given RST-relations, an approach that is indeed bottom-up (I discuss opportunistic planners in Section 2.2.2). A hybrid approach is taken by (Huang, 1994), which combines a top-down (planned) approach with a bottom-up opportunistic perspective based on centering.

Several other approaches have been investigated. Besides the approaches I discuss later in this section, (Power, 2000) poses the problem as a constraint satisfaction problem and uses CSP techniques (Jaffar and Lassez, 1986) to solve it. (Knott et al., 1997) make a case for the use of defeasible rules as a tool for planning coherent and concise texts. (Wolz, 1990) models the problem by having different AI-plans compete with each other to come up with a final decision (another mechanism for doing CSP). In my work, I learned schemata from data (a bottom-up approach), where the schemata are a type of planner that involves a local search during instantiation (top-down skeleton planners, as discussed by (Lim, 1992)).

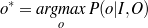

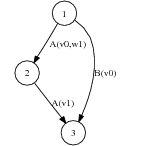

Traditionally, Strategic Generation problems have been addressed in NLG by means of two techniques: AI planners and Schemata. Reasoning can derive the text structure in some cases. In a scenario (Figure 2.2) borrowed from the work of (Rambow, 1999), a reasoning process can derive the text shown in the figure, using, for example, operators derived from rhetorical relations (Section 2.2.2). Reasoning-based Strategic Generation focuses on domains where the structure can be fully deduced from the input data and the intentions of the speaker.

Not all text can be fully deduced from the input; some text has fixed structure coming from the domain. Even though I consider AI-style content planning a useful and interesting approach, many cases require special domain knowledge. For instance, if I know that an actor won three Oscars, was married twice and is 58 years old, common knowledge about biographies would dictate starting a biography with the age and occupation, leaving the rest of the information for later. Nothing intrinsic to age or awards specifies this ordering (it is not part of the domain knowledge itself). The text structure in formulaic domains presents very little intentional structure and is historically motivated; while its current form has logically evolved over time, there is no rationale behind it that can be used for planning. What is needed in such domains is domain knowledge related to its communicative aspects: Domain Communication Knowledge (DCK). DCK has been defined by (Kittredge et al., 1991) as:

(…) the means of relating domain knowledge to all aspects of verbal communication, including communicative goals and function. DCK is necessarily domain dependent. However, it is not the same as domain knowledge; it is not needed to reason about the domain, it is needed to communicate about the domain.

This knowledge can be explicitly or implicitly represented in a strategic component, depending on the judgement of the authors and on its importance for the scenario at hand. In most cases it is left implicit. A notable exception is the work of (Huang, 1994), which defines the notion of proof communicative acts (PCAs).


What is happening behind the scenes is that three different structures can be mapped to the discourse: informative, intentional and focus. In certain domains, one structure dominates the production of texts and a formalism based on only one of them can be enough to structure the text. (Rambow, 1999) proposes an integrated approach to deal with DCK and other issues.

With respect to intention, (Moore and Paris, 1993) (also (Hovy, 1988)) present a discussion of how to represent the beliefs of the Hearer and the intentions of the Speaker in instructional dialogs. The degree of belief may also be important to model, as in the works of (Zukerman et al., 1996), (Walker and Rambow, 1994) and (Rambow, 1999). However, other approaches, e.g., (Moore and Paris, 1993) and (Young and Moore, 1994), prefer to consider belief as a binary (believe or disbelieve) datum. In my work, I neither represent nor use intentional structure beyond the Report(Fact) type of intention, as part of the simplifying assumptions that make Strategic Generation amenable to learning. An interesting area for future work is to incorporate intentional data coming from text by using current research in opinion identification (Zhou et al., 2003, Yu and Hatzivassiloglou, 2003). The matched text can be enriched with opinion and opinion polarity labels by an automatic opinion/polarity tagger. My system can then use this extra information to theorize Content Selection rules that take into account the agent’s stance toward certain facts (that information will need to be modelled outside of the learning system, using traditional cognitive modelling).

I will turn now to schemata-based document structuring.

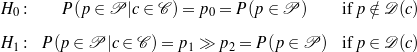

In this section, I present McKeown’s original schemata, then discuss KNIGHT, MAGIC and other schema-like systems. McKeown’s schemata are grammars with terminals in a language of rhetorical predicates, discussed below. The four schemata identified by McKeown in her work are the attributive (Figure 2.3), identification, constituency, and compare and contrast schemata. The schemata accept recursion, as some entries inside the schema definition can be fulfilled directly with predicates or by other schemata. They are not fully recursive, as only four schemata are proposed and there are 23 predicates, although McKeown expressed her belief that the formalism could possibly be extended to full recursion.

| Attributive Schema |
| --- |
| Attributive |
| {Amplification; Restriction} |
| Particular-illustration* |
| {Representative} |
| {Question; Problem; Answer} / {Comparison; Contrast; Adversative} |
| Amplification/Explanation/Inference/Comparison |
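
The view of a schema as a grammar over rhetorical predicates can be illustrated with a minimal sketch. It encodes only the first four slots of the attributive schema as a regular expression over predicate names. The reading of the notation used here is an assumption, not something stated in this section: braces are taken to mark an optional slot, `*` zero or more repetitions, and the `;` inside braces is treated simply as a choice among predicates. The helper names (`tok`, `opt`, `star`, `accepts`) are hypothetical and are not part of McKeown’s implementation, which used ATNs rather than regular expressions.

```python
import re

# Hypothetical encoding: every predicate name becomes one token followed by a space.
def tok(name):
    return "(?:" + re.escape(name) + " )"

def opt(*alternatives):
    # Optional slot; ';' inside braces is ASSUMED to mean a choice among predicates.
    return "(?:" + "|".join(alternatives) + ")?"

def star(item):
    # Zero or more repetitions of an item.
    return "(?:" + item + ")*"

# First four slots of the attributive schema only (a simplification).
ATTRIBUTIVE_PREFIX = (
    tok("Attributive")
    + opt(tok("Amplification"), tok("Restriction"))
    + star(tok("Particular-illustration"))
    + opt(tok("Representative"))
)

def accepts(schema, predicates):
    """Check whether a sequence of predicate names is licensed by the schema."""
    text = "".join(p + " " for p in predicates)
    return re.fullmatch(schema, text) is not None

ok = accepts(ATTRIBUTIVE_PREFIX,
             ["Attributive", "Restriction",
              "Particular-illustration", "Particular-illustration",
              "Representative"])
bad_order = accepts(ATTRIBUTIVE_PREFIX,
                    ["Attributive", "Representative", "Restriction"])
```

Under these assumptions, `ok` holds because the sequence fills the slots in schema order, while `bad_order` fails because Restriction appears after Representative. A regular expression is enough here only because the sketch ignores schema recursion.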

Her predicates are important for my work as they contain a major operational part, the search for associated knowledge. When the schema reaches a rhetorical predicate such as attribute, it fills it with required arguments, e.g., the entity the attribute belongs to. From there, the rhetorical predicate (actually a piece of Lisp code) will perform a search on the relevant knowledge pool for all possible attributes to the given entity. These instantiated predicates (messages in this dissertation) will be available for the schema-instantiation mechanism to choose (by virtue of her focus mechanism, explained below) and then build the document plan.
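
The operational side of a predicate, a search over the relevant knowledge pool that yields instantiated messages, can be sketched as follows. This is an illustrative approximation in Python rather than the original Lisp; the `Fact` and `Message` types, the function `attribute_predicate` and the toy knowledge pool are all hypothetical names and data introduced only for this example.

```python
from typing import List, NamedTuple

class Fact(NamedTuple):
    # One entry of a toy knowledge pool (hypothetical representation).
    entity: str
    relation: str
    value: str

class Message(NamedTuple):
    # An instantiated predicate, i.e. a message in the sense used in this dissertation.
    predicate: str
    entity: str
    relation: str
    value: str

def attribute_predicate(entity: str, pool: List[Fact]) -> List[Message]:
    """Search the pool for every attribute of `entity`; return one message per hit."""
    return [
        Message("attribute", fact.entity, fact.relation, fact.value)
        for fact in pool
        if fact.entity == entity
    ]

# Toy data, invented for illustration only.
pool = [
    Fact("actor-1", "age", "58"),
    Fact("actor-1", "occupation", "actor"),
    Fact("actor-2", "age", "40"),
]

candidates = attribute_predicate("actor-1", pool)
```

The list `candidates` plays the role of the set of instantiated predicates among which the schema-instantiation mechanism then chooses.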

Schemata are then structure and predicates. My work focuses on learning the structure but not the predicates (in a sense, I learn only part of the schemata). Which predicates to use and how to define them is an important part of the schema that I thus assume to be part of the input to my learning system. This is a point of divergence from McKeown’s schemata, which used predicates that were rhetorical in nature. My predicates are domain dependent, which makes creating them a process worth automating. As a step in that direction, I have been able to provide a declarative version of these predicates in a constraint satisfaction formalism (discussed in Chapter 5, Section 5.1).

To implement the schemata, McKeown employed Augmented Transition Networks (ATNs), a declarative formalism that can be extended with procedural code; some of her requirements made use of these extensions. In her system, the rhetorical predicates are executed when traversing different arcs. Both the actions in the arcs and the conditions are arbitrary pieces of Lisp code. An example ATN (Figure 2.4) shows a fair number of possibilities at each node (as seen by the number of arcs leaving each state). One contribution of McKeown’s work is the use of focus to guide the local decision of which arc to traverse at each moment. I will discuss her focus mechanism now.

(McKeown, 1985) contains a full chapter (“Focusing in Discourse,” pages 55–81) describing the importance of centering theory and proposing a motivated solution for discourse planning. She built on the works of both Grosz (global focus) and Sidner (local focus) to extend their interpretation theories with decision heuristics for generation. McKeown’s work on focus is a major ingredient of her schemata and has been adapted for use in other generation systems (e.g., (Paris, 1987)’s TAILOR generator). However, few schema-like planners mentioned in the literature include this piece of the schema. I find that a major oversight of later followers of McKeown’s work.

As pointed out by (Kibble and Power, 1999), research on focus in understanding is interested in discourse comprehension, mostly solving cases of anaphora, e.g., pronominalization. Most centering theories, therefore, provide tools to cut down the candidate search space for anaphora resolution. The generation case is different, however, as these theories offer no insight into how to choose among the set of candidates. Understanding does not require these decisions, as they have already been made by the human author of the text. McKeown complemented centering theories with heuristics suitable for generation. For recent work on centering, (Karamanis, 2003) presents a learning approach to the problem.

McKeown presents heuristics for two decision problems involving focus. In the first problem, the system has to decide between continuing to speak about the current focus and switching to an entity in the previous potential focus list. Her heuristic in that case is to switch; otherwise, the speaker will have to reintroduce the element of the potential focus list at a later point. The second decision arises when deciding whether to continue talking about the current focus or to switch back to an item in the focus stack. Here her heuristic is to stick to the current focus, to avoid the false implication that the topic is finished.
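
Taken together, the two heuristics induce a preference order over candidate foci: an entity from the previous potential focus list first, then the current focus, then an item from the focus stack. The following is a minimal sketch of that ordering only. The function name `choose_next_focus`, its arguments, the example entities, and the choice to rank any other candidate last are assumptions made for illustration; the full mechanism in (McKeown, 1985) involves further details not modelled here.

```python
def choose_next_focus(candidates, current_focus, potential_focus_list, focus_stack):
    """Pick the preferred focus among `candidates` (hypothetical helper).

    Preference order implied by the two heuristics:
      0. switch to an entity in the previous potential focus list,
      1. keep the current focus,
      2. return to an item in the focus stack,
      3. anything else (ranked last; an assumption of this sketch).
    """
    def rank(candidate):
        if candidate in potential_focus_list:
            return 0
        if candidate == current_focus:
            return 1
        if candidate in focus_stack:
            return 2
        return 3

    if not candidates:
        return None
    # min() keeps the first candidate among equally ranked ones.
    return min(candidates, key=rank)

# Toy examples with invented entity names.
switch = choose_next_focus(["ship", "engine"], "ship", ["engine"], [])
stay = choose_next_focus(["fleet", "ship"], "ship", [], ["fleet"])
```

In the first call the function switches to `"engine"` because it was introduced in the previous potential focus list; in the second it stays with `"ship"` rather than popping back to `"fleet"`.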

This is, grosso modo, McKeown’s treatment of focus. The details are more complex but I will skip them as I use McKeown’s focus mechanism without any modifications. Her focus mechanism is important because my machine learning mechanism interacts with it and has to learn schemata in spite of the actual focus system.

(Lester and Porter, 1997) were interested in robust explanation systems for complex phenomena. When designing their Document Structuring module, they focused on two issues: expressiveness (the Document Structuring module should be able to perform its duties) and Discourse-Knowledge Engineering (DCK requires expertise to be acquired and represented, see Section 2.2; they were particularly interested in providing tools to support this vision). This last issue arose after working on a non-declarative Explanation Planning module for a period of time. As the module grew larger, adding new functionality or understanding the existing functionality became a problem, as the actual logic was buried under lines and lines of Lisp code.

To solve this problem, Lester and Porter took the most declarative pieces of their approach and moved them to the knowledge representation system, creating the Explanation Design Packages (EDPs). EDPs are trees represented as frames in a knowledge representation. The frames encapsulate a good deal of Lisp code, defining local variables, conditions over local and global variables, and invocations of KB accessors and arbitrary functions. They are as rich as a programming language.

When compared to schemata, it seems that Lester and Porter arrived at a similar solution coming from the opposite direction. McKeown analyzed a number of texts and background work on rhetorical predicates to hypothesize her schemata as a suitable representation of discourse that she later operationalized using ATNs. As ATNs are of a hybrid declarative/procedural nature, extensions can be coded in by means of extra Lisp code. Her schemata required using some of these extensions. McKeown, therefore, arrived at a hybrid declarative/procedural implementation starting from a fully declarative problem. Lester and Porter, on the other hand, started with a fully procedural implementation and further structured and simplified it until they arrived at their hybrid declarative/procedural EDPs.

True to their roots, EDPs have a more procedural flavor, but that does not preclude a direct comparison with schemata. The frames in EDPs correspond roughly to the states of schemata. Note, however, that there are no cycles in EDPs (as they are trees). The loops in the schemata are represented in EDPs by an iteration mechanism. More interestingly, the KB accessor functions behave operationally exactly the same as McKeown’s predicates. The major difference (and this may be the reason why Lester and Porter did not draw this parallel) is that McKeown stressed the rhetorical nature of her predicates. KNIGHT predicates are not rhetorical, but domain dependent; this is an approach I follow in my work as well. Finally, both approaches allow plugging a sizeable amount of extra Lisp code into their formalisms. EDPs provide more places to do so, while the ATNs concentrate it in the arc transitions.

A key distinction is that EDPs lack any type of search, whereas schemata are skeleton planners: they contain the skeleton of a plan but perform a local search (driven by McKeown’s focus mechanism) to arrive at the final plan (Lim, 1992). Lester and Porter did not mention this fact in their work, and it is clear they did not need such a mechanism for their robust generation approach.

EDPs expand schemata by providing a hierarchical organization of the text that is suitable for multi-paragraph texts. Moreover, they include a prioritization model that makes for a bare-bones user model (but does not compare to either the TAILOR (Paris, 1987) or ILEX (O’Donnell et al., 2001) treatment of the issue) and a number of well-defined non-rhetorical predicates for natural entities and processes. However, Lester and Porter followed a more procedural extension of the schemata, which makes them unsuitable for my learning approach.